Spark can store its data in MongoDB. You can create a Spark DataFrame to hold data from the MongoDB collection specified in the spark.mongodb.read.connection.uri option that your SparkSession is using.
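As a minimal sketch of that read path, assuming MongoDB Spark Connector 10.x is on the Spark classpath (for example via `--packages`): the helper names, the local URI, and the `test` / `people` database and collection are hypothetical placeholders, not values from the source.

```python
# Sketch only: assumes MongoDB Spark Connector 10.x is available to Spark.
# The URI, database, and collection names below are hypothetical.


def mongo_read_conf(uri):
    """Return the session-level setting that names the MongoDB deployment to read from."""
    return {"spark.mongodb.read.connection.uri": uri}


def read_collection(spark, database, collection):
    """Load one MongoDB collection into a DataFrame via the connector's data source."""
    return (
        spark.read.format("mongodb")
        .option("database", database)
        .option("collection", collection)
        .load()
    )


def demo(uri="mongodb://127.0.0.1:27017/"):
    """Build a session and print the schema (needs pyspark and a running MongoDB)."""
    # Imported lazily so the helpers above can be loaded without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("mongo-read-sketch")
    for key, value in mongo_read_conf(uri).items():
        builder = builder.config(key, value)
    spark = builder.getOrCreate()
    read_collection(spark, "test", "people").printSchema()
    spark.stop()
```

Calling `demo()` requires a reachable MongoDB instance; the two helpers can be reused with any existing SparkSession.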

With the connector, you have access to all Spark libraries for use with MongoDB datasets, such as Datasets for analysis with SQL (benefiting from automatic schema inference) and streaming.

Spark is a distributed compute engine, so it expects files to be accessible from all nodes. There are several choices you might consider; one of them appears to be a Spark-MongoDB connector.

By exploiting in-memory optimizations, Spark has shown up to 100x higher performance than MapReduce running on Hadoop. A unified framework: Spark comes packaged ...

MongoDB Connector For Spark MongoDB Spark

Technical architecture: different use cases can benefit from Spark built on top of a MongoDB database. They all take advantage of MongoDB's built-in replication and sharding mechanisms to run Spark on the same large ...

The 1-minute data is stored in MongoDB and is then processed in Spark via the MongoDB Hadoop Connector, which allows MongoDB to be an input or output ...

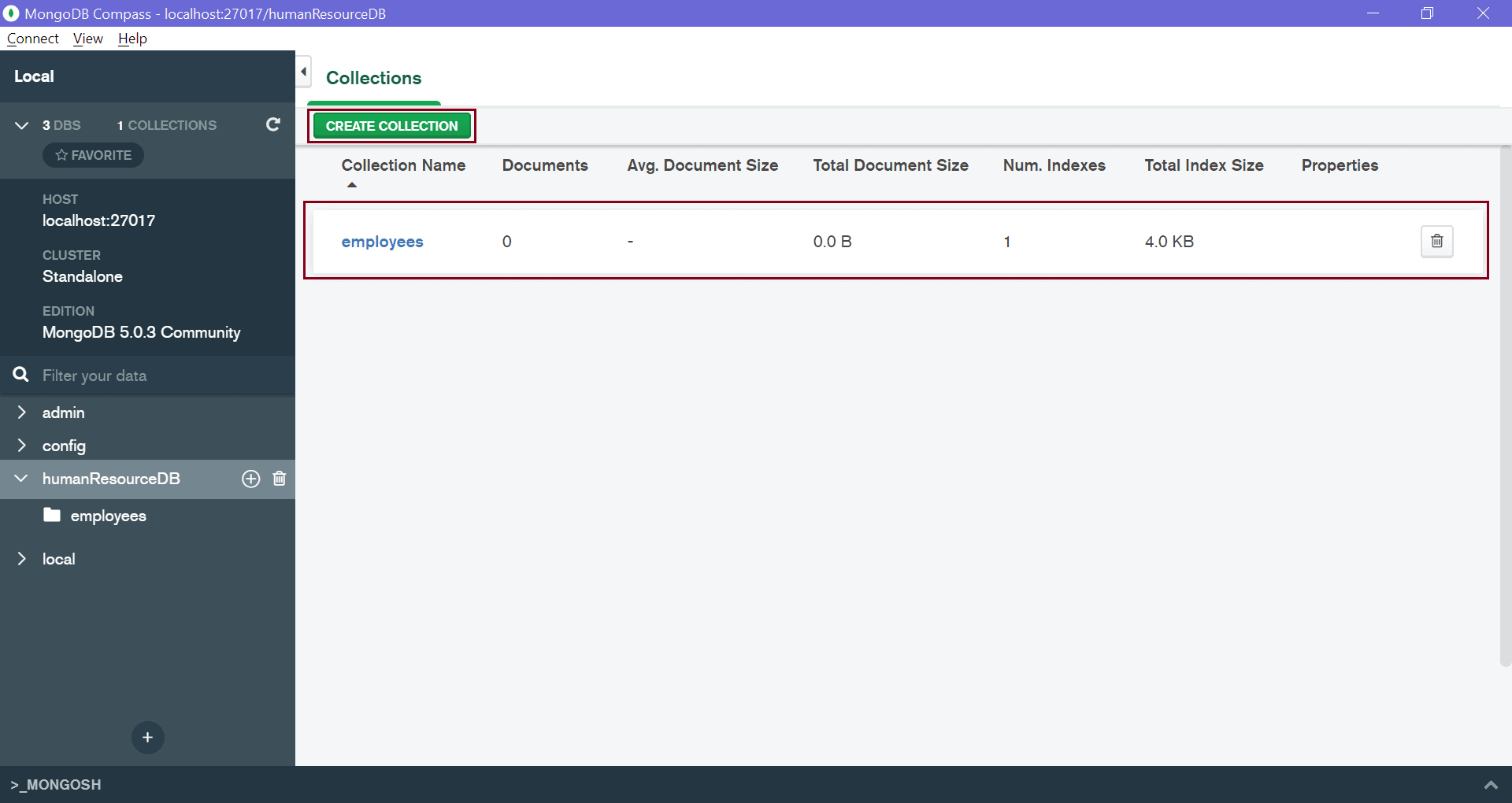

MongoDB Collections

MongoDB Documentation MongoDB Spark Connector

Make sure you have Spark 3 running, either on a cluster or locally. To run MongoDB in a Docker container: `docker run -d -p 27017:27017 --name mongo -v data:/data/db ...`

Step 1: Import the modules. Step 2: Create a DataFrame to store in MongoDB. Step 3: View the schema. Step 4: Save the DataFrame to a MongoDB collection.
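The four steps can be sketched as follows. This is an illustration under stated assumptions rather than the original recipe's code: it assumes MongoDB Spark Connector 10.x, and the sample rows, the helper name `save_to_mongodb`, the local URI, and the `test` / `people` target are all hypothetical.

```python
# Sketch only: assumes MongoDB Spark Connector 10.x is available to Spark.
# Sample data, URI, database, and collection names are hypothetical.

SAMPLE_ROWS = [("Alice", 34), ("Bob", 45)]
SAMPLE_COLUMNS = ["name", "age"]


def save_to_mongodb(df, database, collection, mode="append"):
    """Step 4: write a DataFrame to a MongoDB collection through the connector."""
    (
        df.write.format("mongodb")
        .mode(mode)
        .option("database", database)
        .option("collection", collection)
        .save()
    )


def demo(uri="mongodb://127.0.0.1:27017/"):
    """Run all four steps (needs pyspark and a running MongoDB)."""
    # Step 1: import the modules (lazily, so the helper above loads without Spark).
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.appName("mongo-write-sketch")
        .config("spark.mongodb.write.connection.uri", uri)
        .getOrCreate()
    )
    # Step 2: create the DataFrame to store.
    df = spark.createDataFrame(SAMPLE_ROWS, SAMPLE_COLUMNS)
    # Step 3: view the schema.
    df.printSchema()
    # Step 4: save the DataFrame.
    save_to_mongodb(df, "test", "people")
    spark.stop()
```

The `append` mode adds documents to the collection; passing `mode="overwrite"` replaces the existing contents instead, so it is worth choosing deliberately.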

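Read data from MongoDB. When you set the spark.mongodb.input.uri parameter on your SparkSession, you can build a Spark DataFrame that holds the data from the collection the URI points to. This parameter name belongs to connector versions before 10.0; the 10.x releases use spark.mongodb.read.connection.uri instead.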
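Because the read URI setting and the data source short name differ between connector generations, a small lookup can keep the two apart. The sketch below is illustrative: `read_settings` is a hypothetical helper, and it assumes the short name `mongo` for pre-10.0 connectors (as used in the 3.x documentation) and `mongodb` for 10.x.

```python
# Sketch only: maps a connector major version to the matching read settings.
# The function name and the example URI are hypothetical.


def read_settings(connector_major, uri):
    """Return (data source name, session config) for the given connector generation."""
    if connector_major >= 10:
        # Connector 10.x naming.
        return "mongodb", {"spark.mongodb.read.connection.uri": uri}
    # Naming used by connector releases before 10.0.
    return "mongo", {"spark.mongodb.input.uri": uri}


source_name, conf = read_settings(3, "mongodb://127.0.0.1:27017/test.people")
```

The returned name would go to `spark.read.format(...)` and the dictionary entries to `SparkSession.builder.config(...)`.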

MongoDB is a powerful NoSQL database that can use Spark to perform real-time analytics on its data. Examine how to integrate and use MongoDB and Spark together using ...

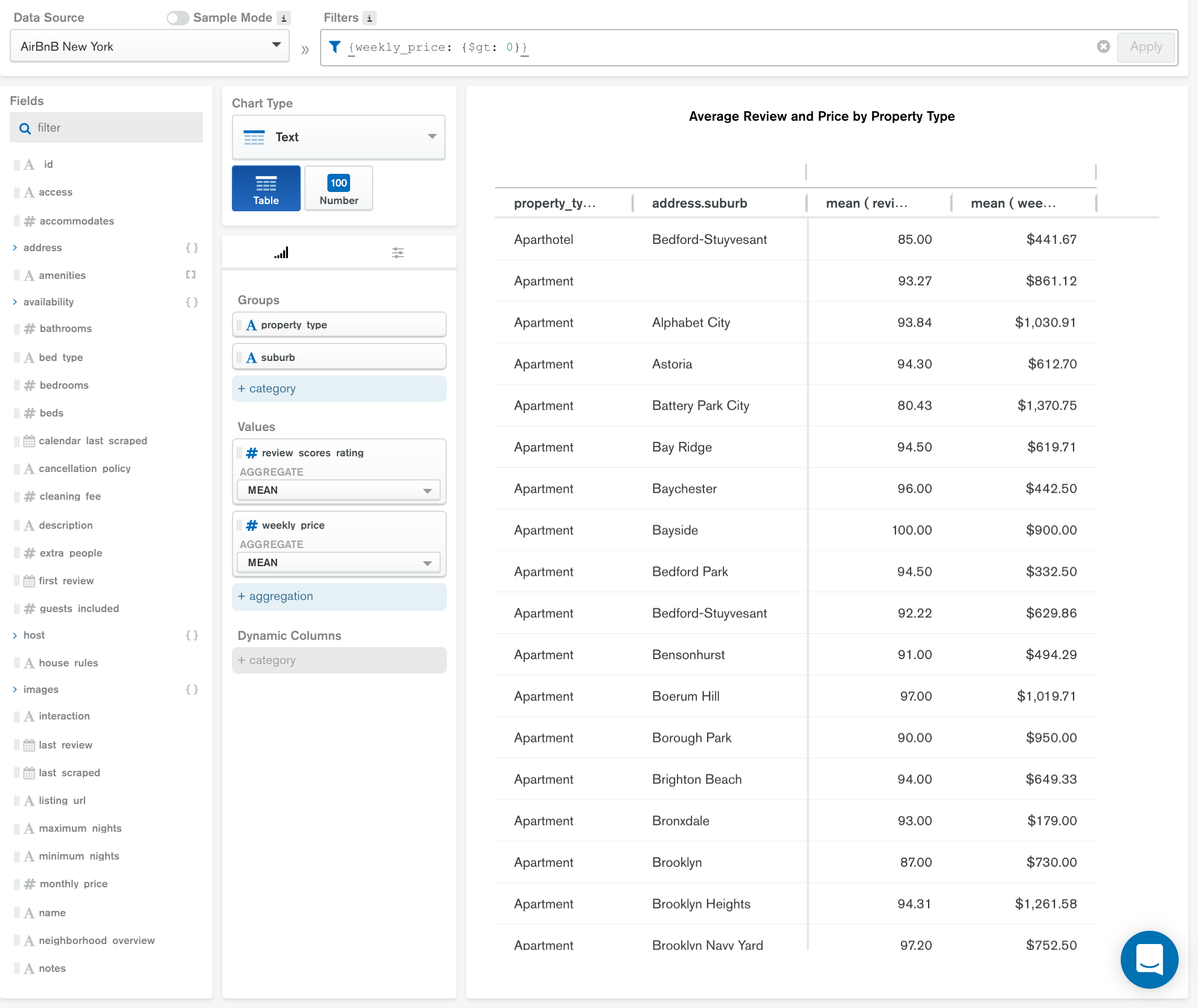

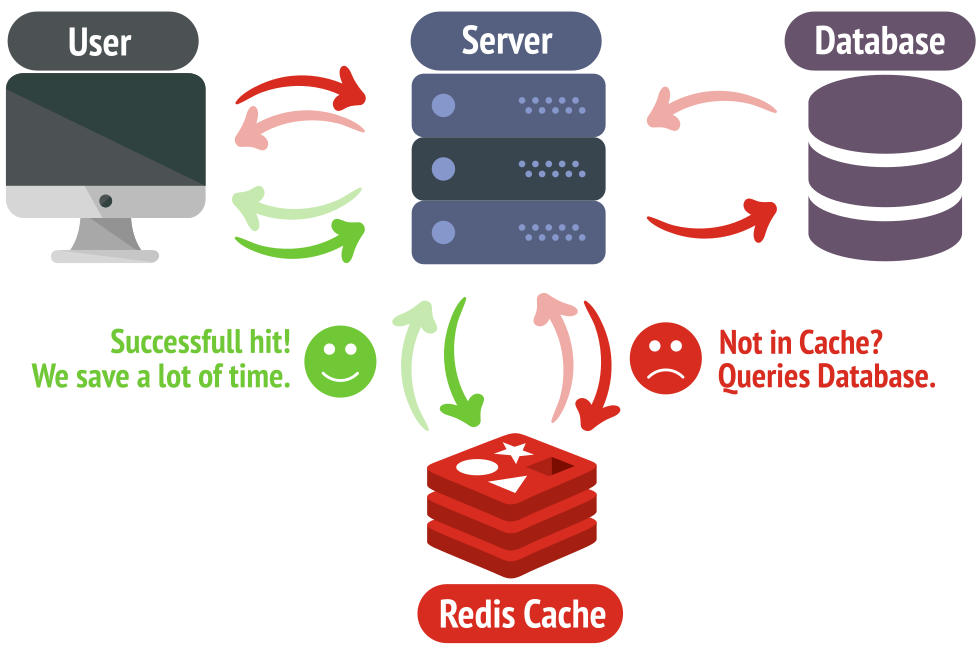
